
Visions of self-replicating nanomachines that could devour the Earth in a "grey goo" are probably wide of the mark, but "radical nanotechnology" could still deliver great benefits to society. The question is how best to achieve this goal.

Nanotechnology is slowly creeping into popular culture, but not in a way that most scientists will like. There is a great example in Dorian – novelist Will Self’s modern reworking of Oscar Wilde’s The Picture of Dorian Gray. In one scene, set in a dingy industrial building on the outskirts of Los Angeles, we find Dorian Gray and his friends looking across rows of Dewar flasks, in which the heads and bodies of the dead are kept frozen, waiting for the day when medical science has advanced far enough to cure their ailments. Although one of Dorian’s friends doubts that technology will ever be able to repair the damage caused when the body parts are thawed out, another friend – Fergus the Ferret – is more optimistic.

– Course they will, the Ferret yawned; Dorian says they’ll do it with nannywhatsit, little robot thingies – isn’t that it, Dorian?

– Nanotechnology, Fergus – you’re quite right; they’ll have tiny hyperintelligent robots working in concert to repair our damaged bodies.

This view that nanotechnology will lead to tiny robotic submarines navigating our bloodstream is ubiquitous, and images like that in figure 1 are frequently used to illustrate stories about nanotechnology in the press. Yet today’s products of nanotechnology are much more mundane – stain-resistant trousers, better sun creams and tennis rackets reinforced with carbon nanotubes. There is an almost surreal gap between what the technology is believed to promise and what it actually delivers.

The reason for this disparity is that most definitions of nanotechnology are impossibly broad. They assume that any branch of technology that results from our ability to control and manipulate matter on length scales of 1-100 nm can be counted as nanotechnology. However, many successes that are attributed to nanotechnology are merely the result of years of research into conventional fields like materials or colloid science. It is therefore helpful to break up the definition of nanotechnology a little.

What we could call “incremental nanotechnology” involves improving the properties of many materials by controlling their nano-scale structure. Plastics, for example, can be reinforced using nano-scale clay particles, making them stronger, stiffer and more chemically resistant. Cosmetics can be formulated such that the oil phase is much more finely dispersed, thereby improving the feel of the product on the skin. These are the sorts of commercially available products that are said to be based on nanotechnology. The science underlying them is sophisticated and the products are often big improvements on what has gone before. However, they do not really represent a decisive break from the past.

In “evolutionary nanotechnology” we move beyond simple materials that have been redesigned at the nano-scale to actual nano-scale devices that do something interesting. Such devices can, for example, sense the environment, process information or convert energy from one form to another. They include nano-scale sensors, which exploit the huge surface area of carbon nanotubes and other nano-structured materials to detect environmental contaminants or biochemicals. Other products of evolutionary nanotechnology are semiconductor nanostructures – such as quantum dots and quantum wells – that are being used to build better solid-state lasers. Scientists are also developing ever more sophisticated ways of encapsulating molecules and delivering them on demand for targeted drug delivery.
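The phrase "huge surface area" can be given a rough number. For solid spheres of diameter d and density ρ, the surface area per unit mass is 6/(ρd), so each tenfold reduction in size gives ten times more surface per gram. The sketch below is only an order-of-magnitude illustration: it assumes spherical particles and a graphite-like density of about 2200 kg/m³, whereas real nanotubes are hollow cylinders.

```python
def specific_surface_area(diameter_m: float, density_kg_m3: float) -> float:
    """Surface area per unit mass (m^2/kg) of a solid sphere.

    Area = pi d^2, mass = rho * pi d^3 / 6, so area / mass = 6 / (rho * d).
    """
    return 6.0 / (density_kg_m3 * diameter_m)


# Assumed density, roughly that of graphite-like carbon.
DENSITY = 2200.0

for d in (1e-3, 1e-6, 100e-9, 10e-9):
    ssa_m2_per_g = specific_surface_area(d, DENSITY) / 1000.0  # m^2/kg -> m^2/g
    print(f"d = {d:.0e} m -> {ssa_m2_per_g:.3g} m^2 per gram")
```

Going from millimetre-sized grains to 10 nm particles multiplies the available surface by a factor of 100 000, which is why nano-structured materials make such sensitive sensing surfaces.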

Taken together, incremental and evolutionary nanotechnology are driving the current excitement in industry and academia for all things nano-scale. The biggest steps are currently being made in evolutionary nanotechnology, and more and more of its products should appear on the market over the next five years.

Grey goo and radical nanotechnology

But where does this leave the original vision of nanotechnology as articulated by Eric Drexler? Back in 1986 Drexler published an influential book called Engines of Creation: The Coming Era of Nanotechnology, in which he imagined sophisticated nano-scale machines that could operate with atomic precision. We might call this goal “radical nanotechnology”. Drexler envisaged a particular way of achieving radical nanotechnology, which involved using hard materials like diamond to fabricate complex nano-scale structures by moving reactive molecular fragments into position. His approach was essentially mechanical, whereby tiny cogs, gears and bearings are integrated to make tiny robot factories, probes and vehicles (figure 2).

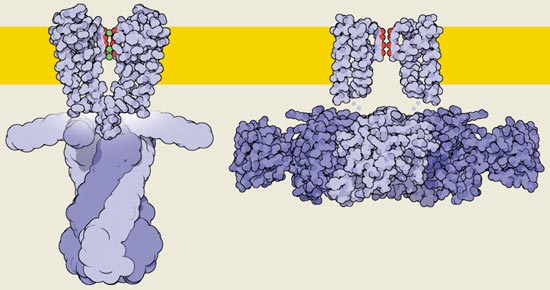

Drexler’s most compelling argument that radical nanotechnology must be possible is that cell biology gives us endless examples of sophisticated nano-scale machines. These include molecular motors of the kind that make up our muscles, which can convert chemical energy to mechanical energy with astonishingly high efficiencies. There are also ion channels (see figure 3) and ion pumps that control the flow of ions through membranes. Other examples include ribosomes – molecular structures that construct protein molecules, amino acid by amino acid, with ultimate precision, following instructions copied from DNA into messenger RNA.
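The ribosome’s task can be caricatured in a few lines. It reads messenger RNA three bases (one codon) at a time and appends the matching amino acid until it reaches a stop codon. The sketch below is a toy: the codon table holds only a handful of entries from the standard genetic code, and the function name `translate` and the example sequence are illustrative. It ignores everything that makes real translation hard, such as tRNA charging, proofreading and folding.

```python
# Deliberately partial codon table (standard genetic code); a real
# ribosome works with all 64 codons.
CODON_TABLE = {
    "AUG": "Met", "UUU": "Phe", "UUC": "Phe", "GGC": "Gly",
    "GCU": "Ala", "AAA": "Lys", "GAA": "Glu", "UGG": "Trp",
    "UAA": "STOP", "UAG": "STOP", "UGA": "STOP",
}


def translate(mrna: str) -> list[str]:
    """Read mRNA one codon at a time from the first AUG start codon,
    adding one amino acid per codon until a stop codon is reached."""
    start = mrna.find("AUG")
    if start == -1:
        return []  # no start codon, so nothing is made
    protein = []
    for i in range(start, len(mrna) - 2, 3):
        # Raises KeyError for codons missing from this partial table.
        residue = CODON_TABLE[mrna[i:i + 3]]
        if residue == "STOP":
            break
        protein.append(residue)
    return protein


print(translate("GGAUGUUUGGCAAAUGGUAAGC"))
```

For the example sequence this prints `['Met', 'Phe', 'Gly', 'Lys', 'Trp']`: the leading `GG` is skipped, and reading halts at the `UAA` stop codon.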

Drexler argued that if biology works as well as it does, researchers ought to be able to do much better. Biology, after all, uses unpromising soft materials – proteins, lipids and polysaccharides – and random design methods that are restricted by the accidents of evolution. Motion is created by changes to the shapes of these molecules, rather than through the cogs and pistons of macroscopic engineering. Furthermore, molecules are moved around through their continual bombardment by other molecules – what is known as Brownian motion – rather than via pipes and tubes. We researchers, however, have the best materials at our disposal. Surely we can create what are, in effect, synthetic life forms that can reproduce and adapt to the environment and overcome “normal” life in the competition for resources?
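A rough estimate shows why Brownian motion can stand in for pipes inside a cell. The Stokes–Einstein relation D = k_BT/(6πηr) gives the diffusion coefficient of a sphere of radius r in a liquid of viscosity η. In three dimensions the mean-square displacement grows as 6Dt. The inputs below are assumptions chosen for illustration: a protein of radius 3 nm, a water-like viscosity of 1 mPa·s and body temperature. Real cytoplasm is more crowded and viscous, so actual times are longer.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant, J/K


def stokes_einstein_D(radius_m, viscosity_pa_s=1.0e-3, temperature_k=310.0):
    """Diffusion coefficient D = k_B T / (6 pi eta r) for a sphere in a liquid."""
    return K_B * temperature_k / (6 * math.pi * viscosity_pa_s * radius_m)


def mean_time_to_diffuse(distance_m, D):
    """Time for the 3D mean-square displacement <x^2> = 6 D t to reach distance^2."""
    return distance_m ** 2 / (6 * D)


D = stokes_einstein_D(3e-9)        # assumed protein radius of about 3 nm
t = mean_time_to_diffuse(1e-6, D)  # distance of about 1 micrometre
print(f"D ~ {D:.2e} m^2/s, time to move 1 um ~ {t * 1e3:.1f} ms")
```

This gives D of roughly 7.6 × 10⁻¹¹ m²/s and a transit time of a couple of milliseconds across a micrometre. At cellular scales, random thermal jostling is therefore fast enough to do the job of plumbing.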

Drexler’s book raised one big spectre. If we could engineer synthetic life forms, could we make, by accident or malevolent design, a plague of runaway self-replicating nanorobots that spreads across the biosphere, consuming its resources and rendering all normal life, including ourselves, extinct? Drexler dubbed this scary possibility the “grey goo” scenario. It triggered many of the public’s doubts about nanotechnology and was the inspiration for Michael Crichton’s novel Prey, which is shortly to be turned into a film.

However, many scientists simply dismissed Drexler’s visions of tiny nano-scale robots as science fiction, so self-evidently absurd as not to be worth considering. Indeed, Drexler himself has recently declared that self-replicating machines are not, after all, necessary for molecular nanotechnology (see Phoenix and Drexler in further reading).

Flaws in Drexler’s vision

It is nevertheless worth examining the shortcomings of Drexler’s original vision because this may give clues as to how we might make radical nanotechnology feasible. Why, for example, do illustrations of nanosubmarines look so absurd to a scientific eye? The reason is that these pictures assume that the engineering that we employ on macroscopic scales can simply be scaled down to the nano-scale. But physics looks very different at such dimensions. Designs that function well in our macroscopic world will work less and less well as they shrink in size. A nanosubmarine would operate in a very different environment to its macroscopic counterpart.

Small objects have low Reynolds numbers. The Reynolds number is a dimensionless quantity equal to the fluid’s density multiplied by the object’s size and speed, divided by the fluid’s viscosity. It measures the ratio of inertial to viscous forces. At low Reynolds numbers, the dominant force opposing motion therefore arises from viscosity rather than inertia. Fluid molecules, meanwhile, continually bombard the object because of Brownian motion. The submarine would therefore be perpetually jostled around, while its internal parts and mechanisms would bend and flex in constant random motion. Another difference at the nano-scale is that surface forces are very strong: the nanosubmarine would probably just stick to the first surface it encountered. These three factors – low Reynolds numbers, ubiquitous Brownian motion and strong surface forces – are what make nano-scale design very challenging, at least at ambient temperatures in the presence of water.
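The gap in Reynolds number between a real submarine and a nanosubmarine spans many orders of magnitude. The sketch below evaluates Re = ρvL/η for water, using a density of 1000 kg/m³ and a viscosity of 1 mPa·s. The sizes and speeds are illustrative assumptions, not measurements of any particular device.

```python
def reynolds_number(length_m, speed_m_s, density_kg_m3=1000.0, viscosity_pa_s=1.0e-3):
    """Re = rho * v * L / eta, the ratio of inertial to viscous forces (water by default)."""
    return density_kg_m3 * speed_m_s * length_m / viscosity_pa_s


# Illustrative (assumed) sizes and speeds, all in water.
cases = {
    "submarine (100 m at 10 m/s)": (100.0, 10.0),
    "bacterium (1 um at 30 um/s)": (1e-6, 30e-6),
    "nanosubmarine (100 nm at 10 um/s)": (100e-9, 10e-6),
}
for name, (length, speed) in cases.items():
    print(f"{name}: Re ~ {reynolds_number(length, speed):.0e}")
```

The submarine comes out near 10⁹, and the bacterium and nanosubmarine come out around 10⁻⁵ and 10⁻⁶. At these values a swimmer coasts essentially zero distance once it stops pushing, because viscosity kills any momentum almost instantly.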

So is radical nanotechnology simply impossible? What biology teaches us is that, contrary to Drexler’s implicit position, life is highly optimized, by billions of years of evolution, for the particular type of physics that operates at the nano-scale. The principles of self-assembly and molecular shape change that cell biology uses so extensively exploit the special physics of the nanoworld – namely ubiquitous Brownian motion and strong surface forces. In other words, if we want to fulfil the goals of radical nanotechnology, we should use soft materials and biological design paradigms. We should also stop worrying about grey goo, because it is going to be very hard to produce more highly optimized nano-scale organisms than nature has already achieved.

The path to radical nanotechnology

Even if the most extreme visions of the nanotechnology evangelists do not come to pass, nanotechnology – in the form of machines structured on the nano-scale that do interesting and useful things – will certainly play a growing part in our lives over the next half-century. How revolutionary the impact of these new technologies will be is difficult to say. Scientists almost always greatly overestimate how much can be done over a 10-year period, but underestimate what can be done in 50 years.

Sometimes the contrast between the grand visions of nanotechnology – robotic nanosubmarines repairing our bodies – and the reality it delivers – say an improved all-in-one shampoo and conditioner – has a profoundly bathetic quality. But the experience we will gain in manipulating matter on the nano-scale in industrial quantities is going to be invaluable. Similarly, there is no point being dismissive about the fact that lots of early applications of nanotechnology will be essentially toys – whether for children or adults – just as data-storage technology is currently being driven forward by the needs of digital TV recorders and portable music players like Apple’s iPod. These apparently frivolous applications will provide the incentive and resources to push the technology further.

But which design philosophy of radical nanotechnology will prevail – Drexler’s original “diamondoid” visions or something closer to the marvellous contrivances of cell biology? One way of finding the answer would be to simply develop the existing technologies that have driven the relentless miniaturization of microelectronics. This “top-down” approach, which uses techniques like photolithography and etching, has already been used to make so-called microelectromechanical systems (MEMS). Such systems are commercially available and have components on length scales of many microns – the acceleration sensors in airbags being a well known example. All we need to do now is shrink these systems even further to create true nanoelectromechanical systems, or NEMS (see Roukes in further reading).

The advantage of this top-down approach is that a massive amount of existing technology and understanding is already in place. The investment, both in terms of plant, and research and development, is currently huge, driven as it is by the vast economic power of the electronics and computing industries. But, as we have seen, the disadvantage is that there are both physical and economic bounds to how small this technology can go. Although industry has shown extraordinary ingenuity in overcoming seemingly insurmountable barriers already – new ultraviolet light sources and phase-shifting masks have made feature sizes below 100 nm a commercial reality – maybe its luck will soon run out. A more fundamental problem is the importance in the nanoworld of Brownian motion and surface forces. Strong surface forces may make the moving parts of a NEMS device stick together and seize up.
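A commonly used rule of thumb shows why shorter wavelengths and phase-shifting masks both help. The smallest printable feature is roughly k₁λ/NA, where λ is the exposure wavelength, NA is the numerical aperture of the projection optics, and k₁ is a process factor that resolution-enhancement techniques push downward. The parameter sets below are assumed, illustrative values, not the specifications of any particular tool.

```python
def min_feature_size_nm(wavelength_nm, numerical_aperture, k1):
    """Rayleigh-type resolution estimate for optical lithography: k1 * lambda / NA."""
    return k1 * wavelength_nm / numerical_aperture


# Assumed, illustrative parameter sets; a phase-shifting mask is modelled
# simply as a lower k1.
for label, wl, na, k1 in [
    ("248 nm KrF, conventional mask", 248.0, 0.7, 0.6),
    ("193 nm ArF, conventional mask", 193.0, 0.85, 0.6),
    ("193 nm ArF, phase-shifting mask", 193.0, 0.85, 0.4),
]:
    print(f"{label}: ~{min_feature_size_nm(wl, na, k1):.0f} nm")
```

Under these assumptions, only the combination of the shorter wavelength and the lower k₁ gets below 100 nm, at about 91 nm. Each further step requires more such tricks, which is why the industry’s luck may eventually run out.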

Taking a lead from nature

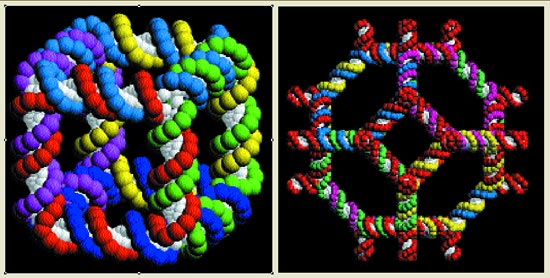

So how could we follow biology’s example and work with the “grain” of the nanoworld? The most obvious method is simply to exploit the existing components that nature gives us. One way would be to isolate components such as molecular motors from their natural habitats and then incorporate them into artificial nanostructures. Biological molecules can also serve as building materials in their own right: Nadrian Seeman at New York University and others have shown how the self-assembly properties of DNA can be used to create quite complicated nano-scale structures and devices (figure 4). Another approach would be to start with a whole, living organism – probably a simple bacterium – and then genetically engineer a stripped-down version that contains only the components that we are interested in.
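DNA self-assembly of this kind relies on Watson–Crick base pairing. Strands designed with complementary single-stranded overhangs ("sticky ends") find and bind to one another. The sketch below captures only the sequence-matching rule and ignores the thermodynamics that decide whether hybridization actually happens at a given temperature. The function names are hypothetical.

```python
COMPLEMENT = {"A": "T", "T": "A", "G": "C", "C": "G"}


def reverse_complement(seq: str) -> str:
    """Return the strand (read 5'->3') that base-pairs with seq."""
    return "".join(COMPLEMENT[base] for base in reversed(seq))


def sticky_ends_pair(end_a: str, end_b: str) -> bool:
    """Two single-stranded overhangs, each written 5'->3', pair fully
    when one is the reverse complement of the other."""
    return len(end_a) == len(end_b) and reverse_complement(end_a) == end_b


print(sticky_ends_pair("AAGG", "CCTT"))  # complementary overhangs
print(sticky_ends_pair("AAGG", "AAGG"))  # not complementary
```

By choosing overhang sequences that pair only with their intended partners, a designer can specify which pieces join to which. This programmability makes DNA such a convenient construction material.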

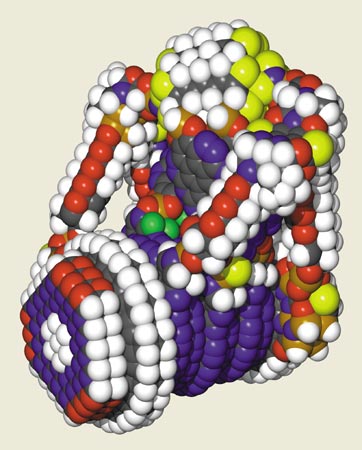

One can think of this approach – often called “bionanotechnology” – as the Mad Max or Scrap Heap Challenge approach to nano-engineering. We are stripping down and then partially reassembling a very complex and only partially understood system to obtain something else that works. This approach exploits the fact that evolution – nature’s remarkable optimization tool – has produced very powerful and efficient nanomachines. We now understand enough about biology to be able to separate out a cell’s components and to some extent utilize them outside the context of a living cell – as illustrated in the work of Carlo Montemagno at the University of California at Los Angeles and Harold Craighead from Cornell University (figure 5). This approach is quick and the most likely way to achieve radical nanotechnology soon.

As we learn more about how bionanotechnology works, it should be possible to use some of the design methods of biology and apply them to synthetic materials. Like bionanotechnology, such “biomimetic nanotechnology” would work with the grain of the special physics of the nanoworld. Of course, the task of copying even life’s simplest mechanisms is formidably hard. Proteins, for example, function so well as enzymes because the particular sequence of amino acids has been selected by evolution from a myriad of possibilities. So when designing synthetic molecules, we need to take note of how evolution achieved this.
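The word "myriad" undersells the numbers. With 20 standard amino acids, a chain of N residues can be built in 20^N different ways. The short calculation below works in base-10 logarithms to avoid printing enormous integers. The chain lengths are arbitrary illustrative choices.

```python
import math


def log10_sequence_count(length: int, alphabet_size: int = 20) -> float:
    """log10 of the number of distinct sequences, alphabet_size ** length."""
    return length * math.log10(alphabet_size)


for n in (10, 100, 300):
    print(f"{n} residues: ~10^{log10_sequence_count(n):.0f} possible sequences")
```

Even a modest 100-residue protein has around 10¹³⁰ possible sequences, far too many to search exhaustively. This is why the design strategy of evolution, rather than brute enumeration, is worth studying.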

But despite the difficulties, biomimetic nanotechnology will let us do some useful – if crude – things. For example, ALZA, a subsidiary of Johnson and Johnson, has already been able to wrap a drug molecule in a nanoscopic container – in this case a spherical shell made from a double layer of phospholipid molecules – and transport it to where it is required in the body. The container can then be made to open and release its cargo.

I do not think that Drexler’s alternative approach – based on mechanical devices made from rigid materials – fundamentally contradicts any physical laws, but I fear that its proponents underestimate the problems that certain features of the nanoworld will pose for it. The close tolerances that we take for granted in macroscopic engineering will be very difficult to achieve at the nano-scale because the machines will be shaken about so much by Brownian motion. Finding ways for surfaces to slide past each other without sticking together or feeling excessive friction is going to be difficult. Unlike the top-down route using silicon, we have no large base of experience and expertise to draw on, and no big economic pressures driving the research forward. And unlike the bionanotechnological and biomimetic approaches, it is working against the grain, rather than with the grain, of the special physics of the nanoworld. Drexler’s approach to radical nanotechnology, in other words, is the least likely to deliver results.
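The equipartition theorem gives a feel for how much Brownian motion shakes a machine part. A component held in place by an effective spring of stiffness k has an average elastic energy of ½k_BT, so its root-mean-square displacement is √(k_BT/k). The stiffness values below are assumed, spanning floppy to very stiff nano-scale parts, and the temperature is taken as 300 K.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant, J/K


def rms_thermal_displacement(stiffness_n_per_m, temperature_k=300.0):
    """Equipartition: (1/2) k <x^2> = (1/2) k_B T, so x_rms = sqrt(k_B T / k)."""
    return math.sqrt(K_B * temperature_k / stiffness_n_per_m)


# Assumed effective stiffnesses, from floppy to very stiff parts.
for k in (0.001, 0.1, 10.0):
    print(f"k = {k:g} N/m -> x_rms ~ {rms_thermal_displacement(k) * 1e9:.2f} nm")
```

The results run from about 2 nm down to 0.02 nm. The middle value of about 0.2 nm is already comparable to the spacing between atoms, which illustrates why holding macroscopic-style tolerances at the nano-scale is so demanding.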

Concerns and fears

Assuming that some kind of radical nanotechnology is possible and feasible, the question is whether we should even want these developments to take place. Some 50 years ago it was generally taken for granted that scientific progress was good for society, but this is certainly not the case now. In some quarters, there are calls for a cautious approach to nanotechnology; at the most extreme, there are demands for a complete moratorium on the development of the technology. In the light of these concerns, the UK government last year asked the Royal Society and the Royal Academy of Engineering to carry out a major survey into the benefits and possible problems of nanotechnology. The report, which is based on extensive collaboration with the public, has just been published.

The public has two key concerns. The first relates to the kind of incremental nanotechnology that is already at or near market – namely that finely divided matter might be intrinsically more toxic than the forms in which we normally encounter it. If the properties of matter are so dramatically affected by size, the argument goes, matter that is harmless in bulk quantities might be more toxic and more effective at getting into our bodies when it is in the form of nano-scale particles.

We know that the physical form of a material can drastically affect its toxicity. One sobering example is asbestos. Chrysotile asbestos is chemically almost identical to other serpentine minerals such as lizardite. But while lizardite is a harmless mineral that consists of flat sheets of atoms, chrysotile contains nano-scale tubes of atoms. Exposure to this tubular form is what has killed so many people from lung cancer and other diseases. Carbon nanotubes, like chrysotile, are the rolled-up version of a sheet-forming mineral that itself is not toxic – in this case, graphite. Although we have no definitive evidence that carbon nanotubes are dangerously toxic, prudence certainly suggests that we should be careful when handling them. After all, every new material has the potential to be toxic.

Regulations controlling the introduction of new materials into the workplace and the environment are, rightly, much stricter now than in the past, and we should appreciate that the properties of materials depend on their physical manifestation as well as their chemical content. But we do not have to assume that all nano-scale materials are inherently dangerous. Imposing a blanket ban would be absurd and unenforceable, simply because we have enough experience of many forms of nanoparticles to know they are safe. If we wanted to avoid nanoparticles completely, we would have to give up drinking milk, full as it is of nano-scale casein particles.

Evolutionary nanotechnology is certainly going to lead to far-reaching changes in society, which we should get to grips with now. It will make computing so cheap and powerful that every product or gadget, however inexpensive, will be able to process, sense and transmit information. Radio-frequency identification chips, which are already available, are just the beginning. But the prospect of cheap, powerful computing – when combined with mass storage and automated image processing – is a totalitarian’s dream and a libertarian’s nightmare.

The public’s second big fear of nanotechnology – beyond these concrete social, environmental and economic factors – concerns the proper relationship between man and nature. Is it right to take living organisms from nature and then reassemble and reconstruct their most basic structures, possibly with additional synthetic components? By replacing living parts of the body with man-made artefacts, are we blurring the line between man and machine? These fears are at the root of the most far-reaching concern about nanotechnology – the grey-goo problem. Of course, fear of loss of control is a primal fear about any technology. The question is whether it is realistic to worry about it.

We should be clear about what this proposition implies: that we can out-engineer evolution by making an entirely synthetic form of life that is better adapted to the Earth’s environment than life itself is. Such a feat is unrealistic in the next 20 years – and probably for a lot longer. We simply do not have a detailed enough knowledge of how life itself works. We have the “parts list”, but very little understanding of how it all fits together and operates as a complex system. Still, our appreciation of how nature engineers at the nano-scale will grow rapidly, and attempts to mimic some of the functions of life will help us to appreciate how biology operates.

But is it even possible in principle to develop a different form of life that works better than the one that currently exists? To find out, we need to take a view on how perfectly adapted life is to its environment. We need to know how many times life got started and how many alternative schemes were tried and failed. We need to know if any of these other schemes, which might possibly have been eliminated by chance or accident, could have done better. Evolution is a very efficient way of finding the optimal solution to the problem of life. Does it always find the best possible solution? Maybe not, but I would be very surprised if we can do better.

Richard Jones is in the Department of Physics and Astronomy, University of Sheffield, Hicks Building, Hounsfield Road, Sheffield S3 7RH, UK, e-mail r.a.l.jones@sheffield.ac.uk. This article is based on the final chapter in Jones’s new book Soft Machines: Nanotechnology and Life, which is published this month by Oxford University Press.
